My side project for the last two months has been putting together what has ended up being a Docker-based website fronted by Kong. (With a Docker image repository thrown in for good measure.) It’s been a bit of an odyssey, to be honest. I didn’t start out meaning to create this monster; it was just supposed to be a Drupal site running on Docker, but as I worked out the development and maintenance processes around it, little details made me gradually add complexity. It might be a bit of a monster, but it is my monster and it works well for me. There is a bit of an Ikea effect going on here, but this is also going to give me a platform to put future projects together, so I am pleased with the whole deal.

What I ended up with

The host

From a hosting perspective I always thought I would get an AWS account and spin up virtual hosts. I looked into it but couldn’t figure out how much it would cost and, more importantly, I couldn’t find any way to cap my payments. If anyone could accidentally create a process that runs up a million-pound debt online it has to be me! I also investigated Azure and Google Compute but none of them offer a billing cap. I just want it to cut off the servers at some point.

My existing site was on a virtual server hosted by a company called Memset. I had one of their standard VPS servers, with no support, and I chose the smallest spec possible. You can get these without support for £3.50 a month, which seemed simple and clear, and they have a great online console. I decided to get myself another one for this project. (I plan to delete the old server when I migrate over.) https://www.memset.com/dedicated-servers/standard-vps/

I set the machine up by installing Docker and creating a Swarm. It’s a swarm of one node right now but I can expand it later. I used Ansible to create the setup processes, so all the configuration scripts can live inside GitLab.

The stuff running on the host

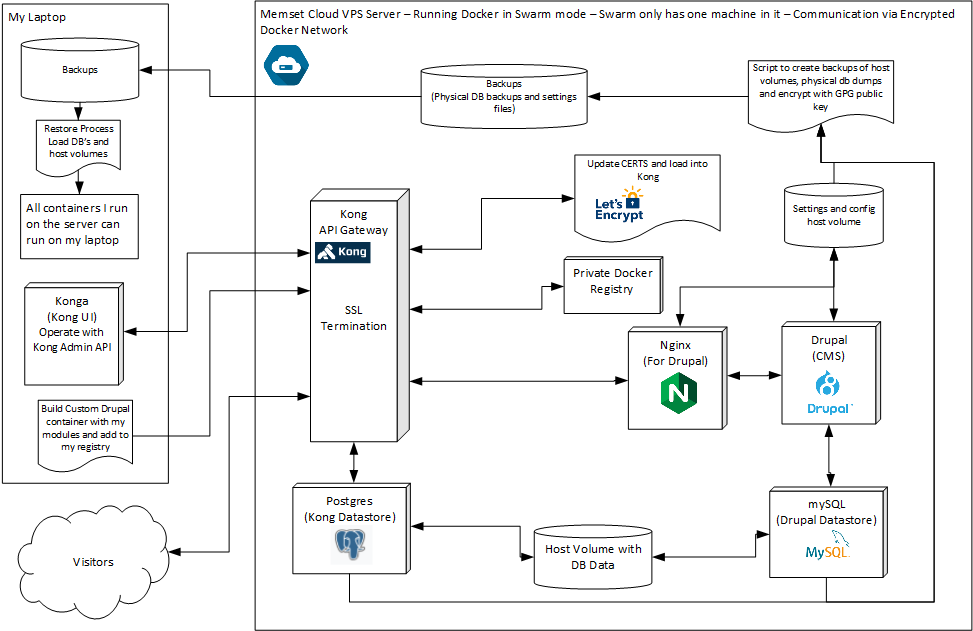

I tried to show most of the components and processes in the image above. I have Kong acting as the main gateway and providing SSL. This was better than having SSL in individual containers with certs and keys shared via Docker secrets because the maintenance overhead is a lot lower. It enables me to update certificates via the Kong API with zero downtime. Drupal needed its own nginx server to take advantage of php-fpm. It would be possible to have a custom nginx config in Kong but I think it's less complicated for Drupal to have its own nginx. I was also able to create a backup process which takes copies of all the data and encrypts them with a public key. These files can be transferred to my laptop and I can create an exact copy of the site to work with locally. Finally, I needed to have a private Docker registry to share the custom Drupal container I am using.
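As a rough illustration of the certificate update mentioned above, here is a minimal Python sketch that builds the request for Kong's Admin API. It assumes the Admin API is reachable at `http://localhost:8001` and that the certificate already exists under a known id (`example-cert-id` is a made-up placeholder). Endpoint behaviour can differ between Kong versions, so treat this as a sketch rather than the exact process used on this server.

```python
import json
import urllib.request


def build_certificate_update(admin_url, cert_id, cert_pem, key_pem):
    """Build (but do not send) a PATCH request that replaces a certificate in Kong.

    admin_url and cert_id are assumptions for illustration; Kong keeps serving
    traffic while the Admin API swaps the stored cert/key pair.
    """
    body = json.dumps({"cert": cert_pem, "key": key_pem}).encode("utf-8")
    return urllib.request.Request(
        url=f"{admin_url.rstrip('/')}/certificates/{cert_id}",
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    request = build_certificate_update(
        "http://localhost:8001", "example-cert-id", "<cert PEM>", "<key PEM>"
    )
    print(request.get_method(), request.full_url)
    # Actually sending it would be: urllib.request.urlopen(request)
```

Building the request separately from sending it makes it easy to check what will be sent before touching a live gateway.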

Why two databases?

Kong only supports Postgres or Cassandra, and Drupal supports Postgres and MySQL. I already had the Drupal setup working with MySQL when I introduced Kong, which is why they are on different databases. It seems to be running fine with two different databases but I am considering migrating the Drupal site over to Postgres so that I only need a single database server. I am not sure it is worth it though, because my next project involves setting up a Cassandra cluster and I may end up migrating Kong to that.

Features

- SSL with Let's Encrypt (and auto renew)

- GPG encrypted backup process

- Completely replicable on my laptop (Encrypted backups can be restored.)

- Works with multiple domains

- Most server processes operated remotely via Ansible

- Kong Admin API exposed so that I can run Konga on my laptop to manage the environment

What I learnt / Bumps I had to overcome

(Lots of detail in the sections below. If I was the reader I would get bored and stop reading here - I think you have passed the important high level bits!)

Drupal 8 as a container

I don’t think all web applications are built in a way that works elegantly with Docker, and Drupal seems to be one that really isn’t there yet. I found it hard to separate the ‘code’ and the ‘data’ portions of the container, and this makes updating to new versions difficult. I settled on mounting the ‘sites’ directory in a host volume. This has worked through two minor version updates; hopefully it will continue to do so.

My process to install a new module or update the Drupal version is to build a new version of the container and deploy this to my repository. I then need to update the version indicator in the compose file and re-deploy.
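The "update the version indicator in the compose file" step is easy to get wrong by hand, so here is a minimal Python sketch of how it could be scripted. The image name `registry.example.com/drupal` and the tags are invented for the example, and it assumes the compose file has a plain `image: name:tag` line.

```python
import re


def set_image_tag(compose_text, image_name, new_tag):
    """Return compose_text with the tag on `image: <image_name>:<tag>` replaced.

    Only lines for the named image are touched; other services keep their tags.
    """
    pattern = re.compile(
        r"^([ \t]*image:[ \t]*" + re.escape(image_name) + r"):\S+[ \t]*$",
        re.MULTILINE,
    )
    return pattern.sub(lambda match: f"{match.group(1)}:{new_tag}", compose_text)


if __name__ == "__main__":
    compose = (
        "services:\n"
        "  drupal:\n"
        "    image: registry.example.com/drupal:1.0.3\n"
        "  db:\n"
        "    image: mysql:5.7\n"
    )
    print(set_image_tag(compose, "registry.example.com/drupal", "1.0.4"))
```

After rewriting the file, the stack would still need to be re-deployed for the new tag to take effect.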

Failing to get automated backup to my Google cloud storage

Using a public GPG key to encrypt the compressed backup files is very useful, and I spent a while trying to find a method to automatically transfer each new backup to my Google cloud storage. The problem was that I did not want to have credentials to my Google account on the server, and I also preferably wanted it to be a one-way drop operation. I wanted to use my Google storage since I have built up space there. Despite research I was unable to find a method to achieve this.
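For reference, the compress-and-encrypt part (the part that does work) can be sketched in a few lines of Python. This is not the actual backup script: the paths and the recipient `backup@example.com` are placeholders, and it assumes `gpg` is installed with the recipient's public key already imported. Only the public key lives on the server, so a stolen backup cannot be decrypted there.

```python
import subprocess
import tarfile
from pathlib import Path


def gpg_encrypt_command(archive, recipient):
    """Return the gpg command line that encrypts `archive` to `recipient`."""
    return [
        "gpg", "--batch", "--yes",
        "--encrypt", "--recipient", recipient,
        "--output", f"{archive}.gpg",
        str(archive),
    ]


def create_encrypted_backup(data_dir, archive, recipient):
    """Tar and gzip data_dir, encrypt it with gpg, and keep only the .gpg file."""
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(data_dir, arcname=Path(data_dir).name)
    subprocess.run(gpg_encrypt_command(archive, recipient), check=True)
    Path(archive).unlink()  # remove the unencrypted archive
    return Path(f"{archive}.gpg")


if __name__ == "__main__":
    # Print the command only; running the backup needs gpg and a real key.
    print(" ".join(gpg_encrypt_command("backup.tar.gz", "backup@example.com")))
```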

Building custom containers in situ didn't work - I need a repository!

Ok, so I tried these steps on my single node docker Swarm:

- Build new docker image

- Deploy image via a compose file and docker stack deploy

- Check it's all running ok

- Delete the stack (docker stack rm)

- Double check the container instance has stopped and is now deleted

- Delete the docker image

- Redeploy the stack

- Be surprised because the deleted image has magically come back to life!

This is a pretty annoying result: if the image and all containers using it have been deleted, it should be gone. I should get an error when I try to start it. The reason I want it to be gone is that I want to build a new version of the image (e.g. with a new version of Drupal) and use that. Even if I went ahead and built the new version of the image, Docker swarm was still launching the old version. I don't understand why it does this, but I think it may be due to the fact that Docker swarm uses some kind of caching and the images still exist in some form. Docker has some purge commands that do resolve the scenario but the process isn't smooth.

This means I must use tags to version my containers, and I need to keep track of the version numbers! I have methods of keeping version numbers and tagging with the next version automatically, but the system needs to work both on my development laptop and on the server. Keeping the version numbers in sync between laptop and server would be complicated. What I really need is a proper build process where I build, tag and version the images and have the server pull the right version.
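One way to avoid keeping counters in sync on two machines is to derive the next version from the tags that already exist in one shared place. The Python sketch below shows only that calculation. It assumes tags follow a `major.minor.patch` pattern, and where the list of existing tags comes from (for example a registry's tag listing) is left open. It is an illustration, not the tagging method I actually use.

```python
def next_patch_tag(existing_tags):
    """Return the tag that follows the highest 'major.minor.patch' tag.

    Tags that do not match the pattern (such as 'latest') are ignored.
    """
    versions = []
    for tag in existing_tags:
        parts = tag.split(".")
        if len(parts) == 3 and all(part.isdecimal() for part in parts):
            versions.append(tuple(int(part) for part in parts))
    if not versions:
        return "0.0.1"
    major, minor, patch = max(versions)
    return f"{major}.{minor}.{patch + 1}"


if __name__ == "__main__":
    print(next_patch_tag(["1.0.9", "1.0.10", "latest"]))
```

Comparing the versions as tuples of integers matters here: a plain string sort would rank `1.0.9` above `1.0.10`.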

As I can't build the containers in situ, I need a Docker registry to act as a source of truth for the images and allow them to be moved to the server. I could use Docker Hub for this, but as the Drupal image is specially built for my site it isn't useful to anyone else. This led me down the path of setting up my own Docker repository. Since I had Kong and a Let's Encrypt process already, it was a simple matter of using the Docker-provided container exposed via Kong.

I hate x_forwarded_* headers - especially x_forwarded_proto

On several occasions I have had to put web applications behind reverse proxies. Web applications give html/javascript to browsers which have to contain onward links to other pages in the same application. Even applications that are mostly nice and have relative links sometimes use 30X redirects which contain the full URLs. These applications break behind reverse proxies because the address of the server is different to the URL the browser uses to get to the application.

My preferred way for applications to deal with this problem is to have configuration parameters which specify what the end user external URL should be. It’s quite simple: you set one configuration option and you're done! Drupal 7 did this ☺, but I was using Drupal 8 ☹. Clever Drupal 8 developers removed this functionality and now it ‘intelligently’ reads the x_forwarded_* headers to determine what the URL is supposed to be. Well, it does, but only after you activate its reverse proxy mode and give it a list of IP addresses of the reverse proxy to prevent IP spoofing. This is great – unless you are putting this all inside Docker, in which case you don’t know what the IP addresses will be. In this case you must use a lesser known option to get it to listen to any IP. The IP spoofing security risk is mitigated by using an encrypted Docker swarm network. That’s two days of my life I will never get back!

Of course this only works if the x_forwarded_* headers are set correctly by Kong. Most of them were, but the x_forwarded_port header was being set to 8443, not 443. Although the Kong container exposed port 443, it was mapped internally to port 8443 and this is the value that Kong used for the header. I tried using the request transformer plugin to add the header but had no luck. I ended up having to research how to configure the Kong Docker image to launch Kong on port 443 to get the whole thing working. (A temporary workaround was to reconfigure Drupal to use an erroneous x_forwarded_port header so it didn’t pick up the received value.)
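To make the mechanics concrete, here is a small Python sketch of the general logic an application behind a proxy follows. It is not Drupal's code: the function name, the fallback URL and the example addresses are all invented, and the headers are written in their usual HTTP form (`X-Forwarded-Proto` and friends). It shows both halves of the problem: headers are only believed when the request comes from a trusted proxy address, and a wrong port value ends up in every generated link.

```python
import ipaddress


def external_base_url(remote_addr, headers, trusted_proxies, fallback):
    """Work out the URL the browser used, trusting headers only from known proxies.

    trusted_proxies is a list of networks in CIDR form; "0.0.0.0/0" trusts any
    IPv4 address, which is roughly the "listen to any IP" situation.
    """
    client = ipaddress.ip_address(remote_addr)
    trusted = any(client in ipaddress.ip_network(net) for net in trusted_proxies)
    if not trusted:
        return fallback  # ignore forwarded headers from unknown senders

    proto = headers.get("X-Forwarded-Proto", "http")
    host = headers.get("X-Forwarded-Host", "localhost")
    port = headers.get("X-Forwarded-Port")
    default_port = "443" if proto == "https" else "80"
    if port and port != default_port:
        return f"{proto}://{host}:{port}"
    return f"{proto}://{host}"


if __name__ == "__main__":
    headers = {
        "X-Forwarded-Proto": "https",
        "X-Forwarded-Host": "www.example.com",
        "X-Forwarded-Port": "8443",  # the internal port leaking through
    }
    print(external_base_url("10.0.0.5", headers, ["0.0.0.0/0"], "http://drupal"))
```

With the port header at 8443 the links point at a port the browser cannot reach; once the proxy reports 443 the port disappears from the URL.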

Getting Kong to talk to Drupal should have been the simplest part of this whole process. It’s frustrating when the simple things turn out so hard!

Kong can't host https whilst redirecting http (unless I want a custom nginx config - which I don't!)

Kong is a very powerful and flexible tool but there are two limitations I have found. You can make it listen to many ports but you can’t let it route requests depending on the incoming port. I just assumed you could do this since it seemed reasonable to expect. The other thing I assumed you could do is route connections depending on whether they are http or https. You can have a service respond to both http and https. You can have it respond to only http and you can have it respond only to https. You can’t have one service respond to http and another to https. (You can enter this as a configuration – it just won’t work!) So my automatic re-routing of http calls to https didn’t work – Kong just gives an error: Please use HTTPS.

There is a git issue about this; some suggest that Kong could have an option to use 30X redirects. Based on the replies I think they are not providing this option on religious grounds. Personally, I don’t care what the proper way of running a web server is; I want to forward my users to https and this is my server! I believe it may be possible to introduce a custom Kong config to achieve this, but I like using the bog standard Kong Docker image so I haven’t decided what to do about this yet.

Kong admin API via Kong

Kong has an admin API. I have Konga running and previously I had always put it inside the same swarm as Kong. This time I configured it to run on my local laptop and connect to the Kong admin API. Kong has quite a neat way of using itself to expose the API. That way I was able to add https and an API key.
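The loopback idea boils down to a handful of Admin API calls: register the Admin API as a service, give it an https-only route, protect it with the key-auth plugin, and create a consumer with a key. The Python sketch below just lists those calls as data. It assumes a Kong version that uses the services/routes model and an Admin API listening on `localhost:8001` inside the container; the names `admin-api`, `/admin-api` and `laptop` are placeholders rather than my real configuration.

```python
def admin_loopback_requests(service="admin-api", path="/admin-api", consumer="laptop"):
    """Return (method, admin_path, payload) tuples that expose the Admin API via Kong.

    All names are illustrative defaults. Each tuple would be sent to the
    (still private) Admin API once during setup.
    """
    return [
        # 1. A service that points back at Kong's own Admin API.
        ("POST", "/services", {"name": service, "url": "http://localhost:8001"}),
        # 2. An https-only route so the API is reachable through the gateway.
        ("POST", f"/services/{service}/routes", {"paths": [path], "protocols": ["https"]}),
        # 3. Require an API key on that service.
        ("POST", f"/services/{service}/plugins", {"name": "key-auth"}),
        # 4. A consumer, and a key for it (Kong generates the key if none is given).
        ("POST", "/consumers", {"username": consumer}),
        ("POST", f"/consumers/{consumer}/key-auth", {}),
    ]


if __name__ == "__main__":
    for method, admin_path, payload in admin_loopback_requests():
        print(method, admin_path, payload)
```

A tool such as Konga on the laptop would then be pointed at the https route and configured to send the generated key with each request.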

Drupal 8's lack of mobile friendliness

I have put together a few applications using the Quasar framework. (https://quasar-framework.org/) It’s a set of libraries including Vue.js which allows you to put together responsive web applications which look Android and iPhone native when run on those platforms. I had forgotten how far back Drupal was in comparison. I tried looking at the Drupal mobile guide (https://www.drupal.org/docs/8/mobile) on my mobile – it doesn’t look pretty! I toyed with the idea of using Drupal as a content database and exposing pages to a Quasar app via an API, but that’s overkill. I have looked into adding a module to Drupal that would allow me to configure the appearance of blocks depending on whether the site is viewed on mobile or desktop, but the module isn’t released on Drupal 8 yet and in any case it is not recommended since it disables caching. In the end I decided that I am going to have to live without a mobile friendly site.

What's next?

My old server still has a Maven repository running on it and I need to decide what to do with it before my term expires. I think I will just be shutting it off. I want to use a Cassandra database cluster as part of my next project, so I might be buying another two VPSs and running a cluster of three! I am also not totally happy with running relational databases (MySQL and Postgres) in Docker containers. I need to learn more about this and work out if there are better methods to run them. I might have to investigate using data containers as opposed to mounted volumes.

Finally I want to expose the Docker API via TLS. This is another requirement for my next project so it might be a good idea to get it working with Portainer on my laptop as a prerequisite.